For teams launching a video product, cloud-native delivery is often the default choice. Object storage combined with a managed CDN promises instant global reach, minimal upfront planning, and a pay-as-you-go model that feels perfectly aligned with early-stage uncertainty.

The appeal is real:

- no hardware decisions

- no capacity planning

- no long-term commitments.

Upload your files, put a CDN in front, and video starts flowing. For small catalogs and moderate traffic, this approach works well, and that initial success reinforces the assumption that it will continue to scale linearly.

The problem is that the cost of video traffic does not scale linearly, and cloud economics are not designed around sustained, high-volume delivery.

The hidden cost curve of video traffic in hyperscale clouds

Cloud platforms are optimized for flexibility, not for long-running, bandwidth-heavy workloads. Video flips the usual cost structure on its head: storage becomes cheap, while data movement becomes dominant.

As viewership grows, costs don’t rise smoothly; they bend upward:

- more regions mean more replication,

- more viewers mean more egress,

- spikes multiply both at once.

What looks inexpensive at low volume can become the largest line item in the infrastructure budget once video becomes core to the product.

Egress pricing vs predictable bandwidth

In cloud-native setups, outbound traffic is the real cost driver. Every gigabyte delivered to viewers is metered, often at rates that feel manageable individually but compound quickly at scale.

This creates several issues:

- Budgeting becomes reactive rather than planned

- Sudden popularity translates directly into surprise bills

- Optimizing delivery becomes a financial exercise, not just a technical one.

Infrastructure-first CDNs approach this differently. Bandwidth is provisioned and priced predictably. Costs are tied to capacity, not to moment-to-moment popularity. For video platforms, this predictability often matters more than theoretical elasticity.
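To make the contrast concrete, here is a minimal sketch of the two billing models. Every number and name in it is an illustrative assumption, not a quote from any provider: `price_per_gb` stands for a metered egress rate, and `price_per_mbps` for a flat monthly rate on committed capacity.

```python
def metered_cost(gb_delivered: float, price_per_gb: float) -> float:
    """Pay-per-use egress: cost tracks every gigabyte delivered."""
    return gb_delivered * price_per_gb


def committed_cost(committed_mbps: float, price_per_mbps: float) -> float:
    """Capacity-based pricing: cost tracks provisioned bandwidth, not usage."""
    return committed_mbps * price_per_mbps


def break_even_gb(committed_mbps: float, price_per_mbps: float, price_per_gb: float) -> float:
    """Monthly volume above which the committed plan becomes the cheaper one."""
    return committed_cost(committed_mbps, price_per_mbps) / price_per_gb


# Hypothetical figures, for illustration only.
PRICE_PER_GB = 0.05      # assumed metered egress rate, USD per GB
PRICE_PER_MBPS = 0.50    # assumed flat rate, USD per Mbps per month
COMMITTED_MBPS = 10_000  # assumed 10 Gbps commitment

for gb in (10_000, 100_000, 1_000_000):
    metered = metered_cost(gb, PRICE_PER_GB)
    committed = committed_cost(COMMITTED_MBPS, PRICE_PER_MBPS)
    print(f"{gb:>9} GB  metered=${metered:,.0f}  committed=${committed:,.0f}")
```

With these assumed numbers, the two models cost the same at 100,000 GB per month. Below that volume the metered plan is cheaper; above it, the metered bill keeps climbing while the committed bill stays flat.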

Cold-start penalties and multi-region replication limits

Cloud CDNs rely heavily on cache-on-demand behavior. When a video is requested for the first time in a region, it must be fetched from origin storage, often located far away.

For video, this creates two problems:

- cold-start latency, which delays playback for early viewers,

- replication inefficiency, where large assets are copied reactively rather than strategically.

Multi-region replication can reduce this, but it introduces new tradeoffs:

- Higher storage costs

- Complex lifecycle management

- Still no guarantee that replicas exist where demand actually emerges.

The result is a system that reacts to demand rather than anticipating it.
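The difference between reacting and anticipating can be shown with a toy model of a single edge location. This is a sketch under stated assumptions, not a description of how any particular CDN works: the `EdgeCache` class, the region name, the asset name, and both latency figures are hypothetical.

```python
from dataclasses import dataclass, field

ORIGIN_FETCH_MS = 800  # assumed startup latency when the edge must fetch from a distant origin
EDGE_HIT_MS = 40       # assumed startup latency when the asset is already at the edge


@dataclass
class EdgeCache:
    region: str
    cached: set[str] = field(default_factory=set)

    def request(self, asset: str) -> int:
        """Return simulated startup latency in ms, caching the asset on a miss."""
        if asset in self.cached:
            return EDGE_HIT_MS
        self.cached.add(asset)  # cache-on-demand: populated only after the first miss
        return ORIGIN_FETCH_MS

    def prewarm(self, assets: list[str]) -> None:
        """Place assets ahead of demand so the first viewer already gets a hit."""
        self.cached.update(assets)


reactive = EdgeCache("eu-west")
proactive = EdgeCache("eu-west")
proactive.prewarm(["launch-trailer.mp4"])

first_reactive = reactive.request("launch-trailer.mp4")    # miss: pays the origin fetch
second_reactive = reactive.request("launch-trailer.mp4")   # hit: served from the edge
first_proactive = proactive.request("launch-trailer.mp4")  # hit on the very first request
```

In the reactive case the first viewer in the region absorbs the origin fetch; with placement ahead of demand, nobody does.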

Operational opacity: routing, throttling, and “black box” behavior

One of the less visible challenges of cloud-native delivery is a lack of transparency.

Key decisions are abstracted away, including:

- Which edge node serves a request

- How traffic is throttled under load

- How congestion is handled internally.

This is convenient until something goes wrong. When startup times increase or buffering appears during peak events, teams often have limited insight into why it happened or how to influence behavior.

For video workloads, where performance issues are immediately user-visible, this opacity can be a serious operational handicap.

When cloud-native delivery stops scaling economically

Cloud-native delivery usually stops making sense at a specific inflection point:

- When video becomes the dominant traffic class

- When peak events matter more than average load

- When margins depend on delivery efficiency

At this stage, teams often realize that they are paying for flexibility they no longer need, while lacking control where it matters most. The system still scales technically, but not economically.

How infrastructure-first CDNs complement or replace cloud delivery

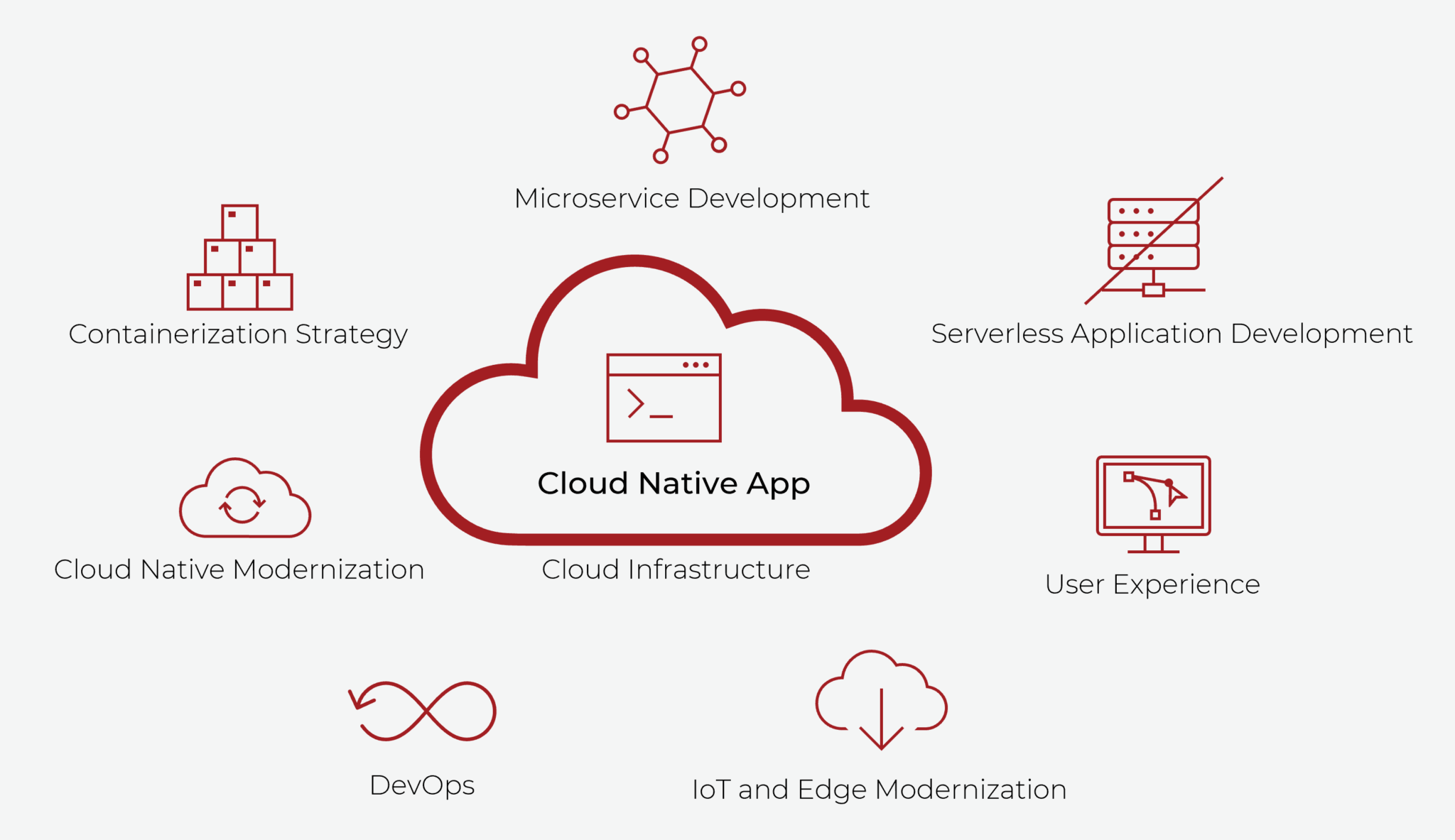

Infrastructure-first CDNs are built around different assumptions:

- Video traffic is continuous and heavy.

- Throughput matters more than burst elasticity

- Control and predictability outweigh abstraction

Instead of relying on opaque, shared platforms, these CDNs:

- Provide real capacity in advance.

- Steer traffic intelligently based on live conditions.

- Operate delivery nodes at high, safe utilization.

For many platforms, this doesn’t mean abandoning the cloud entirely. Cloud storage may remain part of ingestion or archival workflows, while video delivery shifts to infrastructure designed for sustained load.

Migration patterns: hybrid, partial offload, full transition

In practice, teams move away from cloud-native delivery in stages:

- Hybrid delivery. Cloud storage remains the source of truth, while an external video CDN handles delivery to viewers.

- Partial offload. High-traffic or latency-sensitive content is moved to an infrastructure-first CDN, while long-tail or low-volume assets stay in the cloud.

- Full transition. Video delivery, storage, and networking are consolidated into a dedicated infrastructure stack with predictable costs and direct operational control.

Each path reflects different priorities, but the direction is consistent: as video grows, infrastructure becomes a competitive advantage rather than a hidden cost.
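As an illustration of the partial offload pattern, the routing decision can be as simple as a per-asset rule applied when playback URLs are generated. The sketch below is hypothetical throughout: the hostnames, the `Asset` fields, and the 500 GB threshold are assumptions to be replaced with values from your own traffic data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    path: str
    monthly_gb: float        # observed delivery volume for this asset
    latency_sensitive: bool  # e.g. live or premiere content


# Hypothetical hostnames and threshold; tune to your own traffic profile.
CLOUD_CDN_HOST = "cloud-cdn.example.com"
VIDEO_CDN_HOST = "video-cdn.example.com"
OFFLOAD_THRESHOLD_GB = 500.0


def delivery_host(asset: Asset) -> str:
    """Send heavy or latency-sensitive assets to the video CDN, the long tail to the cloud."""
    if asset.latency_sensitive or asset.monthly_gb >= OFFLOAD_THRESHOLD_GB:
        return VIDEO_CDN_HOST
    return CLOUD_CDN_HOST


def playback_url(asset: Asset) -> str:
    """Build the URL handed to the player for this asset."""
    return f"https://{delivery_host(asset)}/{asset.path.lstrip('/')}"


popular = Asset("/vod/hit-series/ep1.m3u8", monthly_gb=12_000.0, latency_sensitive=False)
long_tail = Asset("/vod/archive/2014-talk.m3u8", monthly_gb=3.0, latency_sensitive=False)

print(playback_url(popular))
print(playback_url(long_tail))
```

Keeping the rule in one function makes it easy to move the threshold over time, which is how a partial offload tends to grow into a full transition.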

Ready to move beyond cloud-native video delivery?

If your platform is starting to feel the limits of cloud storage and cloud CDN economics (rising egress bills, unpredictable performance during spikes, or a lack of control over routing and capacity), Advanced Hosting can help.

Our Video CDN is built on owned infrastructure, high-capacity networks, and demand-driven placement, allowing teams to deliver video at scale with predictable costs and transparent behavior.

We work closely with engineers to evaluate current delivery patterns, design an efficient migration path (hybrid or full), and tune performance for real-world peak traffic, not just averages.

Reach out to discuss your workload, and we’ll help you determine whether an infrastructure-first video CDN is the right next step for your platform.